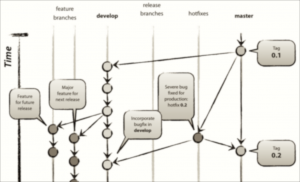

RocketBuild’s preferred tool for peer code reviews. (“git-model @2x” by nvie is licensed under CC BY-SA / Cropped from original)

Or: How I Learned to Stop Worrying and Love the Process

There is a fact underlying any creative endeavor one undertakes, be it painting, crafting, or even computer programming. It is this: a bit of the craftsperson, if they care about their craft, embeds itself into the end product. So when someone critiques that work, it can…you know…feel bad.

Exposing a work to critique is the same as exposing yourself to critique, and we rarely delight in opening ourselves up to judgment. It can make us feel vulnerable, like being naked around strangers. I mean, I assume being naked around strangers is uncomfortable. I wouldn’t know: I’m a never nude.

Uncomfortable as it might be, we at RocketBuild have integrated code reviews into our daily process because they lead to stronger results and a stronger team.

Peer Review vs. Automated Testing

Before any project is released, before any single line of code makes its way into a production branch, it must be both visually inspected and manually tested by another developer. Nothing goes out the door without being exposed to the rigors of unbiased critique.

This is on top of automated testing, which amounts to “programs written to make sure other programs work.” Automated testing verifies for the development team whether or not a program works as intended. Say, for instance, a web page needs to display a user’s email address. I can write a test for that and run it. If said page does, in fact, display the email address, the test passes; if the email address is not displayed, the test fails and I get notified that there’s something wrong. This is all very useful information. It makes maintaining projects in the long term much easier and contributes to a very dependable code base. I can reliably know in moments whether or not the code base works as expected.
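To make the email example concrete, here is a minimal sketch of what such a test might look like in Python. The `User` class, the `render_profile_page` function, and the sample address are all hypothetical names invented for illustration; a real project would exercise its own page-rendering code, most likely through a test framework.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class User:
    """Hypothetical user record, invented for this example."""
    name: str
    email: str


def render_profile_page(user: User) -> str:
    """Hypothetical renderer: builds the HTML for a user's profile page."""
    return f"<h1>{escape(user.name)}</h1><p>Email: {escape(user.email)}</p>"


def test_profile_page_displays_email() -> None:
    """Passes if the email appears in the page, raises AssertionError if not."""
    user = User(name="Ada", email="ada@example.com")
    page = render_profile_page(user)
    assert user.email in page, "email address missing from page"


if __name__ == "__main__":
    test_profile_page_displays_email()
    print("test passed")
```

Note that the test only checks the result: the address either appears in the page or it doesn’t.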

Automated testing can’t do everything, though. What automated tests struggle to detect, given their lack of contextual insight, is what we developers lovingly refer to as bad code smell.

Yes, computer code has a scent, and oh yes, it can smell pretty ripe.

An automated test can detect that something happens — can test the result of an action. It does not care how the end result occurs. It lacks the olfactory sense of a good developer.

The Ends Do Not Justify the Means

Take the example from before, where a page should display a user’s email address. As a developer, I have nearly unlimited ways of making this happen:

- I could use a SELECT statement to find the user’s email address in a SQL database and use server side code, maybe a bit o’ Ruby or some Python, to inject the address into the web page before it gets served out by any one of a dozen possible HTTP servers;

- I could build an API, completely separate from the web page itself, and pull the email address into the page via client side scripts;

- Or, I could just, you know… like, type the email address out by hand.

Which one of these sounds easiest to you? The thing is, if I wrote a test just to ensure a specific user’s email address is displayed, that test would pass with any of these implementations, so I could get away with just manually typing out the email address and forgo building any of those vastly more complicated solutions.

However, as a human (or so I say), with real context and intuition, I know that while it’s important for that single user’s email address to appear, the website should actually display ANY user’s email address — depending on who’s currently logged in. If I look at the code with my special eyes, and I see a static email address typed out by hand, I’ll catch a whiff of bad code smell, and I can let the original developer know they need to implement a more robust solution. In doing so, I can keep the malodorous code from affecting the project further down the road.
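The gap between “the test passes” and “the code is right” can be sketched in a few lines of Python. Everything here is hypothetical and invented for illustration: two stand-in renderers, one with the address typed out by hand and one that uses whoever is logged in, plus a small helper that plays the role of the narrow test.

```python
from html import escape
from typing import Callable


def render_hardcoded(logged_in_email: str) -> str:
    # Smelly: ignores who is logged in and always shows the same address.
    return "<p>Email: ada@example.com</p>"


def render_dynamic(logged_in_email: str) -> str:
    # Shows the address of whichever user is currently logged in.
    return f"<p>Email: {escape(logged_in_email)}</p>"


def shows_email(render: Callable[[str], str], email: str) -> bool:
    """Stand-in for the narrow test: is this email on the rendered page?"""
    return email in render(email)


if __name__ == "__main__":
    # The single-user check cannot tell the two implementations apart.
    print(shows_email(render_hardcoded, "ada@example.com"))
    print(shows_email(render_dynamic, "ada@example.com"))
    # A different logged-in user exposes the hand-typed version.
    print(shows_email(render_hardcoded, "grace@example.com"))
    print(shows_email(render_dynamic, "grace@example.com"))
```

A broader test could catch this particular shortcut, but only if someone thinks to write it. A reviewer reading `render_hardcoded` spots the problem immediately, which is the kind of context a test suite doesn’t have on its own.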

Ask About Code Review

Whether you’re a startup development shop or a client partnering with a technology firm, make sure your programming team has invested in peer code reviews. Sure, it takes some adjustment for the team. Opening yourself up to ridicule is hard, and a colleague taking time to review another programmer’s code does pull them away from actually writing their own code.

It makes everything better, though. The reviewer is exposed to the author’s way of solving a problem. The author gets feedback on what is and isn’t working in their code, and how it can be improved. The code is under continuous improvement. While it may take longer initially to build out a project, the code is more flexible and far more able to cope with end users and unexpected use cases, and improvements and features are far easier to add.

In short, a peer-reviewed code base results in long-term savings, all while maintaining a high standard for code and product quality.